Introduction: 2025 happened, but the real questions started in 2026

From 17 January 2025, DORA (the Digital Operational Resilience Act) stopped being “an IT regulation on paper” and became day-to-day reality for EU financial entities. By 2026, most organisations have already felt it in practice: early reviews, first audit cycles, tabletop exercises, board reporting. The conversation is no longer about impressive demos. It’s about control.

By the end of 2025, many banks and insurers had launched dozens of GenAI pilots: customer support chatbots, analyst assistants, document generation, internal knowledge search (RAG), developer copilots, and the first agentic workflows. 2026 is the first full calendar year of living under DORA, and what matters now is whether you can answer simple but uncomfortable audit questions:

- Who made the decision, and on what basis?

- Which data was used, where did it come from, and was it permitted?

- What happens if your model, supplier, or cloud platform is unavailable?

- Can you demonstrate control over third parties and subcontracting chains?

- Is your Register of Information actually current, or just a “final_final_v7.csv” file?

If 2024–2025 allowed for sandbox experimentation, 2026 is when many GenAI pilots suddenly look less like innovation and more like operational risk: hard to explain, difficult to reproduce, and even harder to defend in an audit.

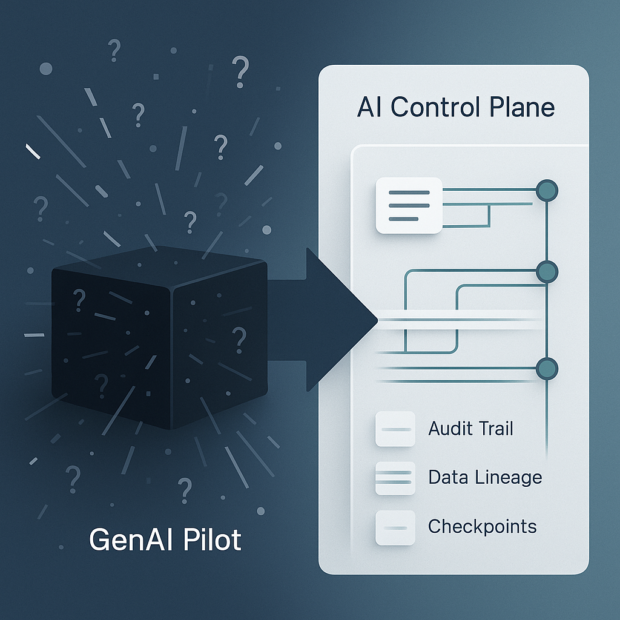

Below, we’ll look at where typical GenAI architectures clash with DORA expectations — and why organisations need not just “more logs”, but a proper control layer. At Intellectum Lab, we address this with Intellectum Lab AI Control: an AI control plane for GenAI in financial services, built for auditability, reproducibility, and exit readiness under DORA.

1) DORA changed the question from “why” to “how will you prove it?”

Previously, AI initiatives were often justified through efficiency: faster customer responses, reduced contact-centre workload, and automating routine work. DORA doesn’t argue with the value. It asks something different:

- How are you managing ICT risk if this supports a critical or important function?

- How are you controlling third parties and subcontracting?

- How do you ensure continuity if a supplier changes or becomes unavailable?

- Where is the evidence that your controls work?

And this is where GenAI meets reality: even with careful engineering, models remain probabilistic, dependency chains are complex, and “we log everything in JSON” isn’t the answer auditors are looking for.

2) Where a typical GenAI pilot conflicts with DORA expectations

2.1 Accountability doesn’t transfer

As soon as GenAI starts influencing customers, operations, risk, or decisions, the idea of “it’s the model provider’s problem” stops working. Under DORA, responsibility stays with the financial entity.

The issue isn’t that vendors are “bad”. The issue is that LLMs are non-deterministic by nature — and without the right architecture you cannot explain outcomes at the level expected by risk, compliance, and audit functions.

2.2 Multi-vendor in principle, lock-in in practice

Many pilots end up tightly coupled to a single provider or model:

- prompts and agent chains are tuned to specific model behaviour,

- embeddings and RAG indexes depend on a particular embedding model,

- safety and policy controls are often bolted onto one API in an ad-hoc way.

In 2026, this hurts more, because exit strategies and business continuity shift from “a paragraph in a policy” to an exercise scenario: “What do we do if this provider is unavailable tomorrow?”

And “we’ll rewrite it in a week” sounds like a plan that won’t survive scrutiny.

2.3 Register of Information vs Shadow AI

The most underestimated risk is unofficial GenAI usage by employees. In large organisations it emerges naturally: people find a tool that speeds up work and start using it before procurement, policy, and approvals catch up.

Under DORA, this is not a moral debate. It’s a governance problem:

- a service is being used → that’s an ICT dependency,

- the dependency isn’t recorded → the register is incomplete,

- you can’t evidence control → the risk isn’t controlled.

By 2026, as organisations move from “preparing” to “operating the process”, Shadow AI becomes a frequent source of unpleasant surprises.
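The chain above (service in use, dependency not recorded, control not evidenced) can be checked mechanically. The following is a minimal sketch, assuming you can export the hostnames seen in outbound traffic (for example from a proxy or DNS log) and the hostnames listed in your Register of Information. The function name and all hostnames are hypothetical and only illustrate the comparison.

```python
def unregistered_dependencies(observed_hosts, register_hosts):
    """Return hosts seen in outbound traffic that the register does not list."""

    def normalise(host):
        # Hostnames are case-insensitive and may carry a trailing dot.
        return host.strip().lower().rstrip(".")

    registered = {normalise(h) for h in register_hosts}
    observed = {normalise(h) for h in observed_hosts}
    return sorted(observed - registered)


# Hypothetical example data: register entries vs. hosts seen on the network.
register = ["api.approved-llm.example", "vectors.internal.example"]
observed = [
    "API.approved-llm.example",
    "chat.unvetted-tool.example",
    "vectors.internal.example",
]

gaps = unregistered_dependencies(observed, register)
print(gaps)  # ['chat.unvetted-tool.example']
```

Each entry in the result is a candidate Shadow AI dependency: either it belongs in the register, or the usage needs to stop or move to a governed alternative.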

2.4 Subcontracting and the AI supply chain

GenAI supply chains are almost always multi-layered: application/integrator, model provider, cloud platform, infrastructure, and supporting services (moderation, vector databases, orchestration), plus occasionally “invisible” subcontractors.

If you cannot show which services actually participate in processing, where data flows, and where the control points are, the audit quickly becomes a guessing game: “What isn’t documented?”

3) The core mismatch: audit expects reproducibility, GenAI defaults to probability

Audit and control functions like repeatability: the same input should yield the same output — or at least a predictable range.

GenAI does not behave that way by default. Even if you set the temperature to zero, you still face:

- model version changes,

- changes to system prompts and policies,

- drift in RAG data and retrieval results,

- changes in underlying knowledge sources,

- different context and tool-calling chains (especially in agentic workflows).

So the critical question becomes: What exactly happened at decision time — using which data, which model version, in which context, under which policies and filters?

If you can’t answer that, you’re exposed both in audits and during real incidents.
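One way to make "what exactly happened at decision time" answerable is to snapshot the moving parts listed above and fingerprint them. The sketch below is illustrative only: the `DecisionContext` fields, the model names, and the document IDs are assumptions chosen to mirror that list, not a prescribed schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class DecisionContext:
    """Hypothetical snapshot of what can change between two runs."""
    model_id: str
    model_version: str
    system_prompt_sha256: str
    policy_version: str
    temperature: float
    retrieved_doc_ids: tuple


def fingerprint(ctx):
    # Canonical JSON (sorted keys, fixed separators) gives a stable hash.
    canonical = json.dumps(asdict(ctx), sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def changed_fields(a, b):
    # Names of the fields that differ between two snapshots.
    da, db = asdict(a), asdict(b)
    return sorted(k for k in da if da[k] != db[k])


prompt_hash = hashlib.sha256(b"You are a cautious banking assistant.").hexdigest()
before = DecisionContext(
    model_id="example-llm",
    model_version="2025-11-01",
    system_prompt_sha256=prompt_hash,
    policy_version="policy-v3",
    temperature=0.0,
    retrieved_doc_ids=("doc-17", "doc-42"),
)
# Same request a month later: the model was upgraded and retrieval drifted.
after = replace(before, model_version="2025-12-01", retrieved_doc_ids=("doc-17", "doc-58"))

print(fingerprint(before) == fingerprint(after))  # False
print(changed_fields(before, after))  # ['model_version', 'retrieved_doc_ids']
```

Storing the fingerprint next to each output lets you tell, after the fact, whether two answers were produced under the same conditions, and if not, which conditions moved.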

4) Why “bolt-on compliance” no longer works

Many teams try to “add compliance later”:

- “we’ll add logging”,

- “we’ll produce reporting”,

- “we’ll plug in standard APM tooling”.

But standard logs answer “did the request succeed?”, while reviews under DORA increasingly ask “was the outcome correct and safe?”:

- was any personal data exposed,

- were prohibited sources used,

- did the chatbot make commitments the firm can’t honour,

- did the model hallucinate material facts,

- can you evidence that the risk is being managed?

That leads to a practical 2026 conclusion: if GenAI is part of real processes, traceability and control must be built in as a mandatory layer, not added as an afterthought.

5) Intellectum Lab AI Control: a mandatory observability and control layer for GenAI under DORA

Rather than talking in slogans, it helps to be direct: financial organisations need an AI control plane — a mandatory observability and control layer that sits across GenAI systems, so DORA requirements can be evidenced, not just described.

Intellectum Lab AI Control is a product from Intellectum Lab designed to keep GenAI in financial workflows controllable — delivering auditability, reproducibility, and exit readiness in a DORA context.

What this looks like in practice:

Per-request audit trail

Intellectum Lab AI Control captures the full context: who initiated the request, what prompt was sent, which RAG sources were retrieved, what generation parameters were used, which model/version ran, what output was produced, and how policy controls affected it.

The aim is simple: any material output should be reconstructable step by step.
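To make the idea concrete, here is a minimal sketch of a per-request audit record stored in a hash-chained, tamper-evident trail. It is not a description of how Intellectum Lab AI Control is implemented. The field names simply mirror the list above, and the values are invented for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash, record):
    body = json.dumps({"prev_hash": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_record(trail, record):
    """Append a record whose hash also covers the previous entry's hash."""
    prev_hash = trail[-1]["hash"] if trail else GENESIS
    trail.append({
        "prev_hash": prev_hash,
        "record": record,
        "hash": _digest(prev_hash, record),
    })


def verify(trail):
    """Return True only if no entry was edited, removed, or reordered."""
    prev_hash = GENESIS
    for entry in trail:
        expected = _digest(entry["prev_hash"], entry["record"])
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


trail = []
append_record(trail, {
    "initiator": "agent-4711",               # who initiated the request
    "prompt": "Summarise complaint #123",    # what prompt was sent
    "rag_sources": ["kb/complaints-policy"],  # which sources were retrieved
    "params": {"temperature": 0.0},          # generation parameters
    "model": "example-llm@2025-11-01",       # model and version
    "output": "Customer disputes a card fee.",
    "policy_actions": ["pii_masked"],        # how policy controls affected it
})
append_record(trail, {"initiator": "agent-4711", "prompt": "Draft reply", "output": "..."})

ok_before = verify(trail)
trail[0]["record"]["output"] = "edited later"  # simulate after-the-fact tampering
ok_after = verify(trail)
print(ok_before, ok_after)  # True False
```

The chaining matters for audit: a reviewer can check that the trail they are shown is the trail that was written at the time, not one cleaned up afterwards.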

Data lineage and dependency mapping

Once you have multiple external services, proxies, moderation, vector databases, orchestration layers, multiple models, and multiple access paths, “what calls what” stops being obvious. Intellectum Lab AI Control helps you understand the actual processing chain and dependencies, which is critical for third-party and subcontracting oversight.
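As an illustration of "what calls what", the sketch below builds a dependency graph from observed caller/callee pairs and lists everything a given application ultimately relies on. The service names and the call list are hypothetical; in practice the pairs would come from traces or gateway logs.

```python
from collections import defaultdict


def build_graph(calls):
    """Turn (caller, callee) pairs into an adjacency map."""
    graph = defaultdict(set)
    for caller, callee in calls:
        graph[caller].add(callee)
    return graph


def downstream(graph, start):
    """Return every service reachable from `start`, directly or indirectly."""
    seen = set()
    stack = [start]
    while stack:
        node = stack.pop()
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    seen.discard(start)  # a cycle should not make a service depend on itself
    return seen


# Hypothetical observed calls for one customer-facing chatbot.
calls = [
    ("support-chatbot", "orchestrator"),
    ("orchestrator", "moderation-api"),
    ("orchestrator", "vector-db"),
    ("orchestrator", "llm-provider"),
    ("llm-provider", "cloud-platform"),
]

graph = build_graph(calls)
chain = sorted(downstream(graph, "support-chatbot"))
print(chain)
# ['cloud-platform', 'llm-provider', 'moderation-api', 'orchestrator', 'vector-db']
```

Note that `cloud-platform` appears even though the chatbot never calls it directly. That indirect hop is exactly the kind of subcontracting link that is easy to miss when the register is built from contracts alone.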

Guardrails before it becomes an incident

Controls before and after generation: policies for data handling, PII, restricted topics, output format, and business constraints (for example, “do not quote interest rates that are not in the product catalogue”).

Because in a DORA environment, a single incorrect customer response can cost far more than a dip in a technical uptime metric.
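The interest-rate example can be sketched as a simple post-generation check. This is a deliberately naive illustration: it treats every percentage in the draft as a quoted rate, and the catalogue values and function name are assumptions, not a real product catalogue or API.

```python
import re
from decimal import Decimal

# Matches numbers followed by a percent sign, e.g. "3.50%" or "4 %".
RATE_PATTERN = re.compile(r"(\d+(?:\.\d+)?)\s*%")


def unapproved_rates(text, catalogue_rates):
    """Return percentages quoted in `text` that are not in the catalogue."""
    approved = {Decimal(rate) for rate in catalogue_rates}
    quoted = [Decimal(match) for match in RATE_PATTERN.findall(text)]
    # Decimal comparison treats 3.50 and 3.5 as the same rate.
    return [str(rate) for rate in quoted if rate not in approved]


# Hypothetical catalogue and model draft.
catalogue = ["3.5", "2.75"]
draft = "Our savings account pays 3.50% and the fixed-term deposit pays 4.1%."

violations = unapproved_rates(draft, catalogue)
print(violations)  # ['4.1']
```

A non-empty result would block or rewrite the response before it reaches the customer, and the decision itself becomes another line in the audit trail.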

Model-agnostic approach for exit strategies

Exit readiness is one of the hardest parts. In critical scenarios you can’t treat one model as your only option. Intellectum Lab AI Control supports an architecture where switching models (or switching mode: cloud/on-prem) is technically feasible without rewriting the entire business logic.
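In code, "switching without rewriting the business logic" usually means the business logic depends on a narrow interface rather than on one vendor's SDK. Below is a minimal sketch under that assumption. The provider functions are stubs, and the names are hypothetical rather than any real vendor API.

```python
from typing import Callable, List, Sequence, Tuple

Provider = Tuple[str, Callable[[str], str]]  # (name, function: prompt -> text)


class AllProvidersFailed(RuntimeError):
    """Raised when no configured provider could answer."""


def generate_with_fallback(prompt: str, providers: Sequence[Provider]) -> Tuple[str, str]:
    """Try providers in order; return (provider_name, output) from the first that works."""
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # a real system would catch narrower error types
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Stub providers standing in for a cloud model and an on-prem fallback.
def flaky_cloud_model(prompt: str) -> str:
    raise ConnectionError("provider unavailable")


def on_prem_model(prompt: str) -> str:
    return f"[on-prem] answer to: {prompt}"


used, answer = generate_with_fallback(
    "What is our complaints process?",
    [("cloud-primary", flaky_cloud_model), ("on-prem-fallback", on_prem_model)],
)
print(used)  # on-prem-fallback
```

The routing is the easy part. Prompts tuned to one model and embeddings tied to one embedding model still need their own migration path, which is why the fallback should be exercised regularly rather than only documented.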

The point isn’t to make AI “perfect”. The point is to manage risk and produce evidence of control when audit questions arrive — or when something goes wrong.

6) A practical 2026 roadmap

In simplified terms, a workable plan for organisations that want to scale GenAI without clashing with DORA tends to look like this:

- Classify use cases honestly. If it affects customers, operations, or risk, it isn’t “just a pilot” anymore.

- Add a mandatory observability and control layer early. Not after the MVP. As part of the architecture from the start.

- Get the register and real dependencies in order. Not just contracts in a folder, but real calls, real services, real integrations.

- Test exit strategies through exercises. Not a document. A scenario: “what happens on day X?”, who switches the fallback on, what constraints you accept, and how you keep serving customers.

- Treat Shadow AI as a signal. Understand where people already get value, and move it into secure, governed corporate workflows.

Conclusion

In 2026, GenAI in financial services is maturing. It’s no longer a contest of “who has the smartest chatbot”. It’s about trust, resilience, and control.

DORA doesn’t ban GenAI. It effectively bans the approach of “we don’t really know what’s happening, but it seems to work”.

If you want GenAI to be part of critical processes, you need a control layer that delivers evidence and repeatability — and the ability to operate with a plan B when a supplier or model becomes unavailable.

Intellectum Lab AI Control is built for exactly that: an AI control plane for GenAI in finance, delivering auditability, reproducibility, and exit readiness under DORA.

If you’d like, we can walk through what this looks like on real architectures (RAG, agents, contact-centre flows, internal copilots) and show how Intellectum Lab AI Control adds the mandatory observability layer that turns a “black box” into a governed system with a clear audit trail.

